GreatExam dumps for the 70-463 exam are written to the highest standards of technical accuracy by our certified subject matter experts and published authors. We guarantee the best quality and accuracy of our products. We hope you pass the exam with our practice test. With our Microsoft 70-463 practice test, you will pass your exam easily on the first attempt. You also get 365 days of free updates for your product.

QUESTION 201

Drag and Drop Questions

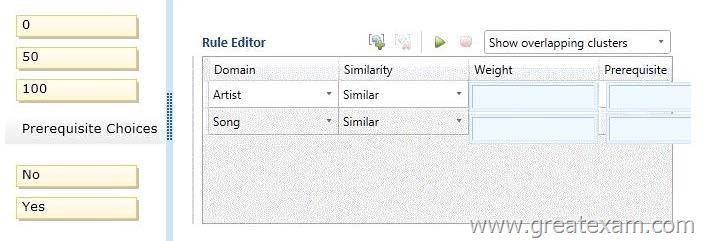

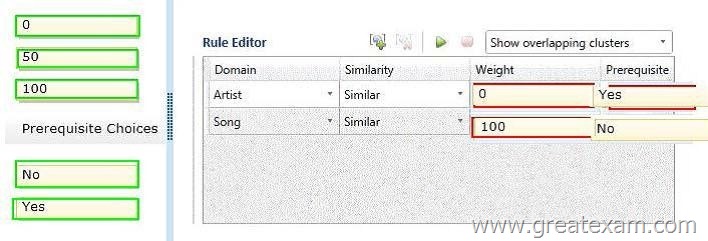

You are loading a dataset into SQL Server.

The dataset contains numerous duplicates for the Artist and Song columns.

The values in the Artist column in the dataset must exactly match the values in the Artist domain in the knowledge base. The values in the Song column in the dataset can be a close match with the values in the Song domain.

You need to use SQL Server Data Quality Services (DQS) to define a matching policy rule to identify duplicates.

How should you configure the Rule Editor? (To answer, drag the appropriate answers to the answer area.)
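The difference between an exact match and a close match can be illustrated outside DQS. The sketch below is not the DQS matching algorithm: it uses Python's `difflib.SequenceMatcher` as a stand-in similarity measure, and the function name `is_duplicate`, the sample records, and the 0.8 threshold are all hypothetical.

```python
from difflib import SequenceMatcher


def is_duplicate(rec_a, rec_b, song_threshold=0.8):
    """Return True when two records look like duplicates.

    Artist must match exactly; Song only needs to be similar enough.
    """
    if rec_a["Artist"] != rec_b["Artist"]:
        return False  # the exact-match requirement on Artist fails
    similarity = SequenceMatcher(
        None, rec_a["Song"].lower(), rec_b["Song"].lower()
    ).ratio()
    return similarity >= song_threshold


a = {"Artist": "Queen", "Song": "Bohemian Rhapsody"}
b = {"Artist": "Queen", "Song": "Bohemian Rapsody"}   # misspelled song title
c = {"Artist": "Queens", "Song": "Bohemian Rhapsody"}  # artist differs slightly

print(is_duplicate(a, b))  # same artist, near-identical song title
print(is_duplicate(a, c))  # artist is not an exact match
```

A near-miss in the Artist value rules the pair out immediately, while a small spelling difference in the Song value is tolerated.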

QUESTION 202

Hotspot Questions

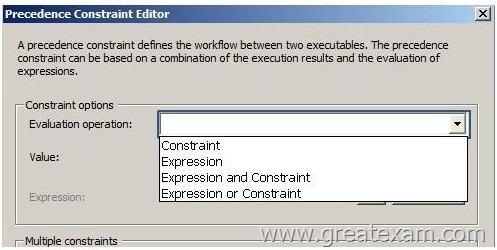

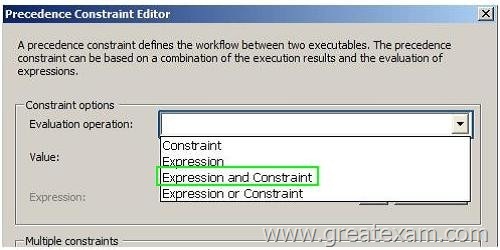

You are developing a SQL Server Integration Services (SSIS) package.

An Execute SQL task in the package checks product stock levels and sets a package variable named InStock to TRUE or FALSE depending on the stock level found.

After the successful execution of the Execute SQL task, one of two data flow tasks must run, depending on the value of the InStock variable.

You need to set the precedence constraints.

Which value for the evaluation operation should you use? (To answer, select the appropriate option for the evaluation operation in the answer area.)

Answer:
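SSIS precedence constraints offer four evaluation operations: Constraint, Expression, Expression and Constraint, and Expression or Constraint. The sketch below models what each one means in plain Python. It is only an illustration of the logic, not SSIS code, and the task names `InStockDataFlow` and `OutOfStockDataFlow` are hypothetical.

```python
def constraint_allows(eval_op, result_ok, expression_ok):
    """Decide whether the downstream task may run.

    eval_op       -- name of the evaluation operation
    result_ok     -- True if the upstream execution result matches the constraint value
    expression_ok -- True if the precedence expression evaluated to True
    """
    if eval_op == "Constraint":
        return result_ok
    if eval_op == "Expression":
        return expression_ok
    if eval_op == "ExpressionAndConstraint":
        return result_ok and expression_ok
    if eval_op == "ExpressionOrConstraint":
        return result_ok or expression_ok
    raise ValueError(f"unknown evaluation operation: {eval_op}")


def tasks_to_run(sql_task_succeeded, in_stock):
    """Return the data flow tasks whose precedence constraints are satisfied."""
    runnable = []
    # One constraint tests InStock, the other tests its negation.
    if constraint_allows("ExpressionAndConstraint", sql_task_succeeded, in_stock):
        runnable.append("InStockDataFlow")
    if constraint_allows("ExpressionAndConstraint", sql_task_succeeded, not in_stock):
        runnable.append("OutOfStockDataFlow")
    return runnable
```

Because the scenario requires both a successful Execute SQL task and a check of the InStock variable, the sketch combines the two conditions with a logical AND, so exactly one data flow task runs after success and none runs after failure.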

QUESTION 203

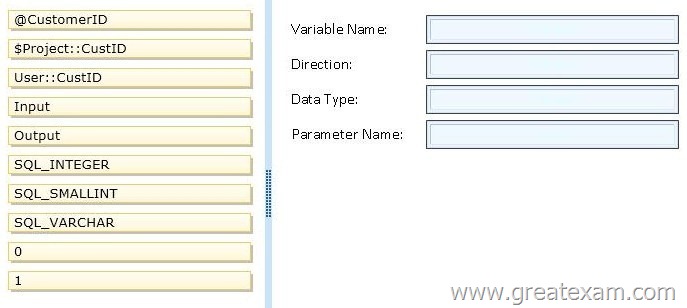

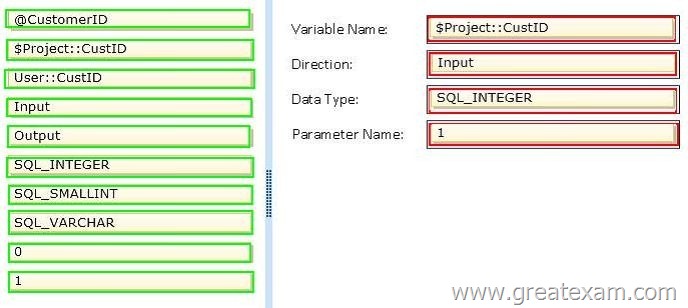

Drag and Drop Questions

You are developing a SQL Server Integration Services (SSIS) package that downloads data from a Windows Azure SQL Database database.

A stored procedure will be called in an Execute SQL task by using an ODBC connection.

This stored procedure has only the @CustomerID parameter of type INT.

A project parameter named CustID will be mapped to the stored procedure parameter @CustomerID.

You need to ensure that the value of the CustID parameter is passed to the @CustomerID stored procedure parameter.

In the Parameter Mapping tab of the Execute SQL task editor, how should you configure the parameter? (To answer, drag the appropriate option or options to the correct location or locations in the answer area.)

Answer:

QUESTION 204

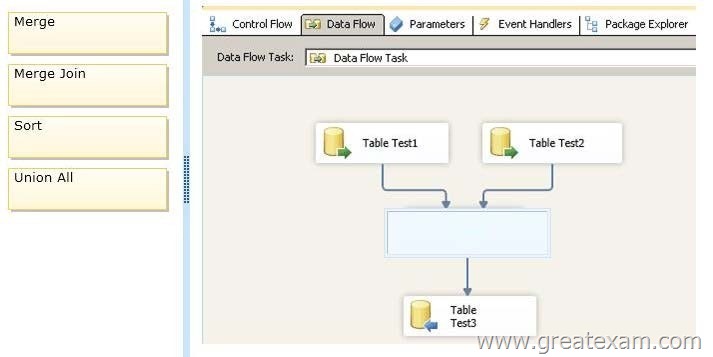

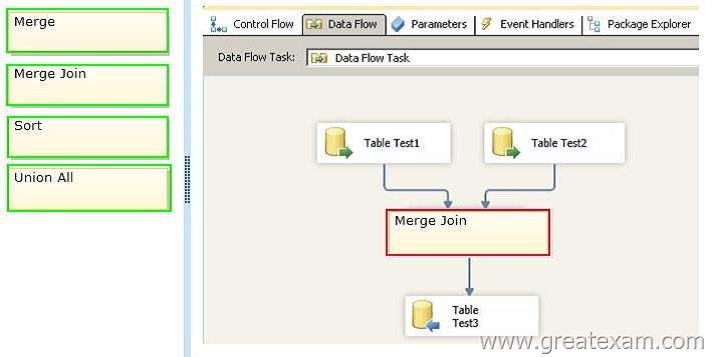

Drag and Drop Questions

You are developing a SQL Server Integration Services (SSIS) package that loads data into a data warehouse hosted on Windows Azure SQL Database.

You must combine two data sources together by using the ProductID column to provide complete details for each record.

The data retrieved from each data source is sorted in ascending order by the ProductID column.

You need to develop a data flow that imports the data while meeting the requirements.

How should you develop the data flow? (To answer, drag the appropriate transformation from the list of transformations to the correct location in the answer area.)

Answer:
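Joining two inputs that are already sorted on the join key is the situation a merge-style join is built for, and the SSIS Merge Join transformation requires sorted inputs. The following is a minimal sketch of the underlying algorithm in Python, not of the SSIS component itself. The sample rows and the column names `Name` and `Price` are hypothetical.

```python
def merge_join(left, right, key="ProductID"):
    """Inner-join two lists of dicts that are already sorted ascending by key."""
    joined = []
    i = j = 0
    while i < len(left) and j < len(right):
        left_key, right_key = left[i][key], right[j][key]
        if left_key < right_key:
            i += 1  # no partner for this left row
        elif left_key > right_key:
            j += 1  # no partner for this right row
        else:
            # Pair the left row with every right row sharing this key.
            k = j
            while k < len(right) and right[k][key] == left_key:
                joined.append({**left[i], **right[k]})
                k += 1
            i += 1
    return joined


products = [
    {"ProductID": 1, "Name": "Bolt"},
    {"ProductID": 2, "Name": "Nut"},
    {"ProductID": 4, "Name": "Washer"},
]
prices = [
    {"ProductID": 1, "Price": 0.10},
    {"ProductID": 3, "Price": 0.25},
    {"ProductID": 4, "Price": 0.05},
]

combined = merge_join(products, prices)
```

Each input is read once from start to end, which is why the sort order matters: an unsorted input would cause matching rows to be skipped.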

QUESTION 205

Drag and Drop Questions

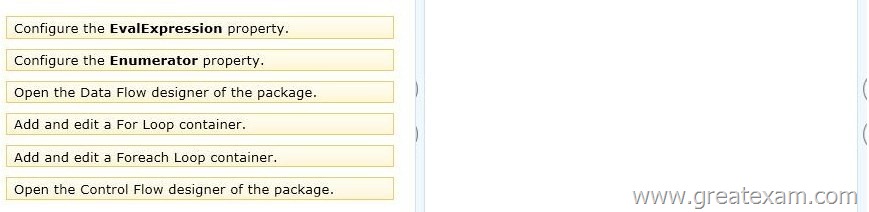

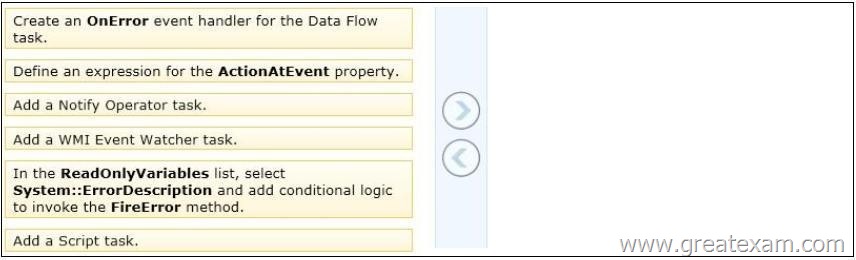

You are developing a SQL Server Integration Services (SSIS) package.

The package contains several tasks that must repeat until an expression evaluates to FALSE.

You need to add and configure a container to enable this design.

Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:
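A loop that repeats until an expression evaluates to FALSE is the behavior of the SSIS For Loop container, which exposes the `InitExpression`, `EvalExpression`, and `AssignExpression` properties. The sketch below mimics that control flow in Python. The helper `run_for_loop` and its arguments are hypothetical and only illustrate the order in which the three expressions are evaluated.

```python
def run_for_loop(init, evaluate, assign, body):
    """Mimic a For Loop container: three expressions plus a body of tasks."""
    state = init()              # InitExpression: evaluated once, before the loop
    iterations = 0
    while evaluate(state):      # EvalExpression: checked before every pass
        body(state)             # the tasks placed inside the container
        state = assign(state)   # AssignExpression: evaluated after every pass
        iterations += 1
    return iterations


processed = []
count = run_for_loop(
    init=lambda: 0,
    evaluate=lambda counter: counter < 3,
    assign=lambda counter: counter + 1,
    body=processed.append,
)
```

The loop stops as soon as the evaluation expression returns False, so the body here runs for the counter values 0, 1, and 2.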

QUESTION 206

You are designing a data warehouse with two fact tables.

The first table contains sales per month and the second table contains orders per day.

Referential integrity must be enforced declaratively.

You need to design a solution that can join a single time dimension to both fact tables.

What should you do?

A. Create a view on the sales table.

B. Partition the fact tables by day.

C. Create a surrogate key for the time dimension.

D. Change the level of granularity in both fact tables to be the same.

Answer: D

Explanation:

To join both fact tables to a single time dimension, the fact tables cannot use two different time grains (month and day).

QUESTION 207

You are deploying a new SQL Server Integration Services (SSIS) package to several servers.

The package must meet the following requirements:

– .NET Common Language Runtime (CLR) integration in SQL Server must not be enabled.

– The Connection Managers used in the package must be configurable without editing the package.

– The deployment procedure must be automated as much as possible.

You need to set up a deployment strategy that meets the requirements.

What should you do?

A. Use the gacutil command.

B. Use the dtutil /copy command.

C. Use the Project Deployment Wizard.

D. Create an OnError event handler.

E. Create a reusable custom logging component.

F. Run the package by using the dtexec /rep /conn command.

G. Run the package by using the dtexec /dumperror /conn command.

H. Run the package by using the dtexecui.exe utility and the SQL Log provider.

I. Add a data tap on the output of a component in the package data flow.

J. Deploy the package by using an msi file.

K. Deploy the package to the Integration Services catalog by using dtutil and use SQL Server to store the configuration.

Answer: B

QUESTION 208

You maintain a SQL Server Integration Services (SSIS) package.

The package was developed by using SQL Server 2008 Business Intelligence Development Studio (BIDS).

The package includes custom scripts that must be upgraded.

You need to upgrade the package to SQL Server 2012.

Which tool should you use?

A. SQL Server dtexec utility (dtexec.exe)

B. SQL Server DTExecUI utility (dtexecui.exe)

C. SSIS Upgrade Wizard in SQL Server Data Tools

D. SQL Server Integration Services Deployment Wizard

Answer: C

Explanation:

Use the SSIS Package Upgrade Wizard to upgrade SQL Server 2005 Integration Services (SSIS) packages and SQL Server 2008 Integration Services (SSIS) packages to the package format for the current (2012) release of SQL Server Integration Services.

QUESTION 209

You administer a SQL Server Integration Services (SSIS) solution in the SSIS catalog.

A SQL Server Agent job is used to execute a package daily with the basic logging level.

Recently, the package execution failed because of a primary key violation when the package inserted data into the destination table.

You need to identify all previous times that the package execution failed because of a primary key violation.

What should you do?

A. Use an event handler for OnError for the package.

B. Use an event handler for OnError for each data flow task.

C. Use an event handler for OnTaskFailed for the package.

D. View the job history for the SQL Server Agent job.

E. View the All Messages subsection of the All Executions report for the package.

F. Store the System::SourceID variable in the custom log table.

G. Store the System::ServerExecutionID variable in the custom log table.

H. Store the System::ExecutionInstanceGUID variable in the custom log table.

I. Enable the SSIS log provider for SQL Server for OnError in the package control flow.

J. Enable the SSIS log provider for SQL Server for OnTaskFailed in the package control flow.

K. Deploy the project by using dtutil.exe with the /COPY DTS option.

L. Deploy the project by using dtutil.exe with the /COPY SQL option.

M. Deploy the .ispac file by using the Integration Services Deployment Wizard.

N. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_project stored procedure.

O. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_package stored procedure.

P. Create a SQL Server Agent job to execute the SSISDB.catalog.create_execution and SSISDB.catalog.start_execution stored procedures.

Q. Create a table to store error information. Create an error output on each data flow destination that writes OnError event text to the table.

R. Create a table to store error information. Create an error output on each data flow destination that writes OnTaskFailed event text to the table.

Answer: E

QUESTION 210

You are developing a SQL Server Integration Services (SSIS) package to load data into a Windows Azure SQL Database database.

The package consists of several data flow tasks.

The package has the following auditing requirements:

– If a data flow task fails, a Transact-SQL (T-SQL) script must be executed.

– The T-SQL script must be executed only once per data flow task that fails, regardless of the nature of the error.

You need to ensure that auditing is configured to meet these requirements.

What should you do?

A. Use an event handler for OnError for the package.

B. Use an event handler for OnError for each data flow task.

C. Use an event handler for OnTaskFailed for the package.

D. View the job history for the SQL Server Agent job.

E. View the All Messages subsection of the All Executions report for the package.

F. Store the System::SourceID variable in the custom log table.

G. Store the System::ServerExecutionID variable in the custom log table.

H. Store the System::ExecutionInstanceGUID variable in the custom log table.

I. Enable the SSIS log provider for SQL Server for OnError in the package control flow.

J. Enable the SSIS log provider for SQL Server for OnTaskFailed in the package control flow.

K. Deploy the project by using dtutil.exe with the /COPY DTS option.

L. Deploy the project by using dtutil.exe with the /COPY SQL option.

M. Deploy the .ispac file by using the Integration Services Deployment Wizard.

N. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_project stored procedure.

O. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_package stored procedure.

P. Create a SQL Server Agent job to execute the SSISDB.catalog.create_execution and SSISDB.catalog.start_execution stored procedures.

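Q. Create a table to store error information. Create an error output on each data flow destination that writes OnError event text to the table.

R. Create a table to store error information. Create an error output on each data flow destination that writes OnTaskFailed event text to the table.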

Answer: C

QUESTION 211

You are developing a SQL Server Integration Services (SSIS) project with multiple packages to copy data to a Windows Azure SQL Database database.

An automated process must validate all related Environment references, parameter data types, package references, and referenced assemblies.

The automated process must run on a regular schedule.

You need to establish the automated validation process by using the least amount of administrative effort.

What should you do?

A. Use an event handler for OnError for the package.

B. Use an event handler for OnError for each data flow task.

C. Use an event handler for OnTaskFailed for the package.

D. View the job history for the SQL Server Agent job.

E. View the All Messages subsection of the All Executions report for the package.

F. Store the System::SourceID variable in the custom log table.

G. Store the System::ServerExecutionID variable in the custom log table.

H. Store the System::ExecutionInstanceGUID variable in the custom log table.

I. Enable the SSIS log provider for SQL Server for OnError in the package control flow.

J. Enable the SSIS log provider for SQL Server for OnTaskFailed in the package control flow.

K. Deploy the project by using dtutil.exe with the /COPY DTS option.

L. Deploy the project by using dtutil.exe with the /COPY SQL option.

M. Deploy the .ispac file by using the Integration Services Deployment Wizard.

N. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_project stored procedure.

O. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_package stored procedure.

P. Create a SQL Server Agent job to execute the SSISDB.catalog.create_execution and SSISDB.catalog.start_execution stored procedures.

Q. Create a table to store error information. Create an error output on each data flow destination that writes OnError event text to the table.

R. Create a table to store error information. Create an error output on each data flow destination that writes OnTaskFailed event text to the table.

Answer: N

QUESTION 212

You are developing a SQL Server Integration Services (SSIS) project by using the Project Deployment Model. All packages in the project must log custom messages.

You need to produce reports that combine the custom log messages with the system generated log messages.

What should you do?

A. Use an event handler for OnError for the package.

B. Use an event handler for OnError for each data flow task.

C. Use an event handler for OnTaskFailed for the package.

D. View the job history for the SQL Server Agent job.

E. View the All Messages subsection of the All Executions report for the package.

F. Store the System::SourceID variable in the custom log table.

G. Store the System::ServerExecutionID variable in the custom log table.

H. Store the System::ExecutionInstanceGUID variable in the custom log table.

I. Enable the SSIS log provider for SQL Server for OnError in the package control flow.

J. Enable the SSIS log provider for SQL Server for OnTaskFailed in the package control flow.

K. Deploy the project by using dtutil.exe with the /COPY DTS option.

L. Deploy the project by using dtutil.exe with the /COPY SQL option.

M. Deploy the .ispac file by using the Integration Services Deployment Wizard.

N. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_project stored procedure.

O. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_package stored procedure.

P. Create a SQL Server Agent job to execute the SSISDB.catalog.create_execution and SSISDB.catalog.start_execution stored procedures.

Q. Create a table to store error information. Create an error output on each data flow destination that writes OnError event text to the table.

R. Create a table to store error information. Create an error output on each data flow destination that writes OnTaskFailed event text to the table.

Answer: G

QUESTION 213

You are developing a SQL Server Integration Services (SSIS) package to implement an incremental data load strategy.

The package reads data from a source system that uses the SQL Server change data capture (CDC) feature.

You have added a CDC Source component to the data flow to read changed data from the source system.

You need to add a data flow transformation to redirect rows for separate processing of insert, update, and delete operations.

Which data flow transformation should you use?

A. Audit

B. Merge Join

C. Merge

D. CDC Splitter

Answer: D

Explanation:

The CDC Splitter splits a single flow of change rows from a CDC source data flow into different data flows for Insert, Update, and Delete operations.

http://msdn.microsoft.com/en-us/library/hh758656.aspx
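The routing idea can be sketched in a few lines of Python. This is an illustration only, not the SSIS component. It assumes the `__$operation` codes used by SQL Server change tables (1 = delete, 2 = insert, 3 = update before image, 4 = update after image), and dropping the before-image rows is a simplification made for this sketch.

```python
# __$operation codes as used by SQL Server change tables (assumed here)
DELETE, INSERT, UPDATE_BEFORE, UPDATE_AFTER = 1, 2, 3, 4


def split_changes(rows):
    """Route change rows into separate insert, update, and delete outputs."""
    outputs = {"insert": [], "update": [], "delete": []}
    for row in rows:
        operation = row["__$operation"]
        if operation == INSERT:
            outputs["insert"].append(row)
        elif operation == UPDATE_AFTER:
            outputs["update"].append(row)
        elif operation == DELETE:
            outputs["delete"].append(row)
        # UPDATE_BEFORE rows are dropped in this simplified sketch.
    return outputs


changes = [
    {"__$operation": INSERT, "CustomerID": 1},
    {"__$operation": UPDATE_AFTER, "CustomerID": 2},
    {"__$operation": DELETE, "CustomerID": 3},
    {"__$operation": INSERT, "CustomerID": 4},
]
routed = split_changes(changes)
```

Each output can then feed its own destination or processing path, which is what "separate processing" means in the question.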

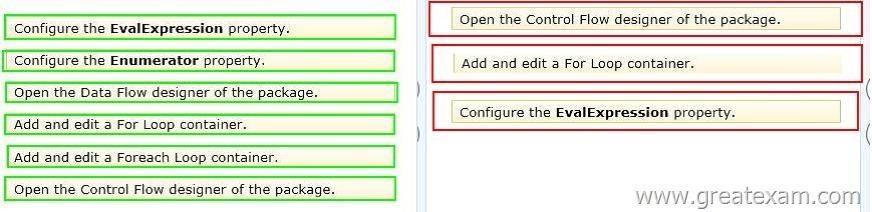

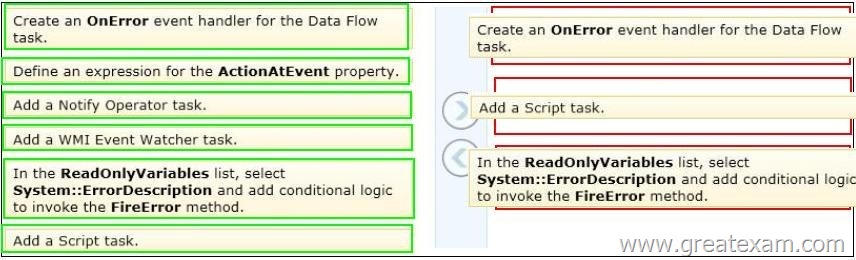

QUESTION 214

Drag and Drop Questions

A Data Flow task in a SQL Server Integration Services (SSIS) package produces run-time errors. You need to edit the package to log specific error messages.

Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

QUESTION 215

You need to extract data from delimited text files.

What connection manager type would you choose?

A. A Flat File connection manager

B. An OLE DB connection manager

C. An ADO.NET connection manager

D. A File connection manager

Answer: A

QUESTION 216

Some of the data your company processes is sent in from partners via email.

How would you configure an SMTP connection manager to extract files from email messages?

A. In the SMTP connection manager, configure the OperationMode setting to Send And Receive.

B. It is not possible to use the SMTP connection manager in this way, because it can only be used by SSIS to send email messages.

C. The SMTP connection manager supports sending and receiving email messages by default, so no additional configuration is necessary.

D. It is not possible to use the SMTP connection manager for this; use the IMAP (Internet Message Access Protocol) connection manager instead.

Answer: B

QUESTION 217

You need to extract data from a table in a SQL Server 2012 database.

What connection manager types can you use? (Choose all that apply.)

A. An ODBC connection manager

B. An OLE DB connection manager

C. A File connection manager

D. An ADO.NET connection manager

Answer: ABD

QUESTION 218

In your SSIS solution, you need to load a large set of rows into the database as quickly as possible.

The rows are stored in a delimited text file, and only one source column needs its data type converted from String (used by the source column) to Decimal (used by the destination column).

What control flow task would be most suitable for this operation?

A. The File System task would be perfect in this case, because it can read data from files and can be configured to handle data type conversions.

B. The Bulk Insert task would be the most appropriate, because it is the quickest and can handle data type conversions.

C. The data flow task would have to be used, because the data needs to be transformed before it can be loaded into the table.

D. No single control flow task can be used for this operation, because the data needs to be extracted from the source file, transformed, and then loaded into the destination table. At least three different tasks would have to be used: the Bulk Insert task to load the data into a staging database, a Data Conversion task to convert the data appropriately, and finally, an Execute SQL task to merge the transformed data with existing destination data.

Answer: C
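The extract, convert, and load sequence that a data flow performs in a single pass can be sketched in Python. This is an illustration of the idea only, not SSIS code. The function `load_rows`, the sample data, and the column names are hypothetical.

```python
import csv
import io
from decimal import Decimal


def load_rows(text, decimal_column, delimiter=","):
    """Read delimited text and convert one string column to Decimal."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    rows = []
    for row in reader:
        # The only transformation: String -> Decimal for a single column.
        row[decimal_column] = Decimal(row[decimal_column])
        rows.append(row)
    return rows


sample = "ProductID;Price\n1;9.99\n2;12.50\n"
rows = load_rows(sample, "Price", delimiter=";")
```

The conversion happens between reading and loading, which is the step a pure bulk load has no place for.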

QUESTION 219

A part of your data consolidation process involves extracting data from Excel workbooks.

Occasionally, the data contains errors that cannot be corrected automatically.

How can you handle this problem by using SSIS?

A. Redirect the failed data flow task to an External Process task, open the problematic Excel file in Excel, and prompt the user to correct the file before continuing the data consolidation process.

B. Redirect the failed data flow task to a File System task that moves the erroneous file to a dedicated location where an information worker can correct it later.

C. If the error cannot be corrected automatically, there is no way for SSIS to continue with the automated data consolidation process.

D. None of the answers above are correct. Due to Excel’s strict data validation rules, an Excel file cannot ever contain erroneous data.

Answer: B

QUESTION 220

In your ETL process, there are three external processes that need to be executed in sequence, but you do not want to stop execution if any of them fails.

Can this be achieved by using precedence constraints? If so, which precedence constraints can be used?

A. No, this cannot be achieved just by using precedence constraints.

B. Yes, this can be achieved by using completion precedence constraints between the first and the second and between the second and the third Execute Process tasks, and by using a success precedence constraint between the third Execute Process task and the following task.

C. Yes, this can be achieved by using completion precedence constraints between the first and the second, between the second and the third, and between the third Execute Process task and the following task.

D. Yes, this can be achieved by using failure precedence constraints between the first and the second, and between the second and the third Execute Process tasks, and by using a completion precedence constraint between the third Execute Process task and the following task.

Answer: B
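How success, failure, and completion constraints affect a chain of tasks can be simulated with a short Python sketch. It is a simplified model rather than SSIS behavior in full, and the function `run_sequence` and the task names are hypothetical.

```python
def run_sequence(tasks, constraints):
    """Run tasks in order; constraints[i] guards the step from task i to task i+1.

    Each task is a (name, succeeded) pair. Each constraint is
    "success", "failure", or "completion".
    Returns the names of the tasks that actually ran.
    """
    ran = []
    for index, (name, succeeded) in enumerate(tasks):
        ran.append(name)
        if index == len(tasks) - 1:
            break  # last task: nothing left to guard
        rule = constraints[index]
        allowed = (
            rule == "completion"
            or (rule == "success" and succeeded)
            or (rule == "failure" and not succeeded)
        )
        if not allowed:
            break  # the constraint blocks the rest of the chain
    return ran


# The first external process fails; the others succeed.
tasks = [("Process1", False), ("Process2", True), ("Process3", True), ("NextTask", True)]

with_completion = run_sequence(tasks, ["completion", "completion", "success"])
with_success = run_sequence(tasks, ["success", "success", "success"])
with_failure = run_sequence(tasks, ["failure", "failure", "completion"])
```

With completion constraints between the processes, the chain continues past the failed first process. Success constraints stop after it, and failure constraints stop as soon as one process succeeds.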
